
What is Penguin in SEO?

Published 2 years ago by techonpc in SEO

One of Google’s hardest jobs is maintaining a search engine that returns excellent results for any searcher’s query. Google uses an algorithm to generate the results you see when you enter a search. Like most technology, the algorithm has become more sophisticated over time, which lets Google fine-tune its Search Engine Results Pages (SERPs). Google algorithm updates are used to filter out spam content and allow high-quality content to rank higher. Google updates its search engine continuously, but we usually hear about an update only when it causes a significant change to SERPs. For an overview of Google’s update history, you can look at this Google Algorithm Update Guide.

In this article, we are going to look at one update, called Penguin. Let’s start with a little history.

A Little Google Update History

On April 11, 2011, Google rolled out its first Panda update globally to English-language searches, after launching it in the US that February. Panda was created primarily to filter out content produced by content farms: sites that earned money by posting large amounts of thin content. These sites gamed Google’s algorithm with keyword strategies and ranked high in SERPs, sometimes above higher-quality content. The update largely worked.

However, spammy pages were still ranking higher than they should because of other black hat strategies. Content with a lot of incoming links, even low-quality ones, outranked better content with fewer incoming links, and keyword-stuffed content was also ranking higher than it should. The Penguin update was created to fix these problems.

Google’s Penguin Update

Google first ran the Penguin update on April 24, 2012, and continued to refine and re-run it until September 23, 2016, when Penguin became part of Google’s core algorithm.

Why Google Penguin?

Google’s Penguin update was specifically designed to filter out spammy content that used black hat linking and keyword stuffing techniques to outrank high-quality content. These spam pages were built only to rank high in SERPs through the sheer number of links and/or keywords they used. The search engine therefore needed a way to filter out pages that had lots of links from bad sources, or that used keyword stuffing to rank artificially.

When your website or content page earns genuine backlinks (incoming links), it shows that other sites are referring visitors to you, on the assumption that you have relevant information to help them find what they’re searching for. The Penguin update actively examined the types of links a page had. As Search Engine Journal notes:

“The algorithm’s objective was to gain greater control over and reduce the effectiveness of, a number of black hat spamming techniques.”

By better understanding and processing the types of links websites and webmasters were earning, Penguin worked to ensure that natural, authoritative, and relevant links rewarded the websites they pointed to, while manipulative and spammy links were downgraded.

Penguin deals only with a site’s incoming links: Google looks at the links pointing to the site in question and does not look at that site’s outgoing links at all.

Moz describes keyword stuffing as “populating a webpage with large numbers of keywords or repetitions of keywords in an attempt to manipulate rank via the appearance of relevance to specific search phrases.” Keyword-stuffed content was literally packed with every possible keyword for a specific topic. While that may have been necessary in the early days of internet search, Google has been able to understand context and relevance for quite some time.
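Google does not publish a keyword-density threshold, and Penguin’s actual signals are not public. Still, a rough self-check can help you spot copy that repeats one term far more than natural writing would. The Python sketch below counts how often a single-word keyword appears relative to all the words on a page; the function names and the 10% cutoff in `looks_stuffed` are hypothetical choices for illustration, not values Google uses.

```python
import re
from collections import Counter

WORD = re.compile(r"[a-z0-9']+")


def words_in(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return WORD.findall(text.lower())


def keyword_density(text: str, keyword: str) -> float:
    """Share of all words in `text` that equal the single-word `keyword`."""
    words = words_in(text)
    if not words:
        return 0.0
    return Counter(words)[keyword.lower()] / len(words)


def top_terms(text: str, n: int = 5) -> list[tuple[str, int]]:
    """The n most frequent words, useful for spotting a term repeated too often."""
    return Counter(words_in(text)).most_common(n)


def looks_stuffed(text: str, keyword: str, threshold: float = 0.10) -> bool:
    """Hypothetical heuristic: flag pages where one keyword exceeds `threshold`."""
    return keyword_density(text, keyword) > threshold


if __name__ == "__main__":
    stuffed = ("Cheap shoes. Buy cheap shoes. Cheap shoes online, "
               "cheap shoes sale, cheap shoes now.")
    natural = ("These running shoes are light, breathable and comfortable "
               "enough for long training runs on the road.")
    print(keyword_density(stuffed, "cheap"))  # 5 of 14 words
    print(looks_stuffed(stuffed, "cheap"))    # True
    print(looks_stuffed(natural, "shoes"))    # False
    print(top_terms(stuffed, 2))
```

Counting only single words keeps the sketch short; a multi-word phrase such as “cheap shoes” would need phrase matching. A high number is a prompt to reread the page as a visitor would, not proof of a penalty.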

The first Penguin rollout affected about 3.1% of English-language search queries.

How to Optimize for Penguin

The most important part of optimizing for Penguin is to create content meant to be read by real people, not by search engines. Google’s algorithms can discern the topic of your content without you needing to use a sledgehammer; instead, Google understands your topic through context and other clues.

You should still create content with keywords as a focus, but use them naturally. Google can find related keywords in your content. Your primary goal with content creation should be to engage with visitors.

Does your content answer the question that your visitor is searching for?

Checking Your Backlinks

You can’t really control who links to your content, but you can find out where your backlinks come from. Google Search Console has many useful features, and one of them is a report listing the sites that link to yours. Keep in mind that this list doesn’t tell you whether the links are “follow” or “nofollow.” You can also use third-party tools, but some sites that link to you may block those tools’ crawlers, so their data may be incomplete.
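Once you have a list of the pages that link to you (for example, exported from Search Console or a third-party tool; export formats vary, so this sketch simply assumes a plain list of URLs), grouping them by linking site makes unusual patterns easier to see, such as one unfamiliar domain sending many links. `linking_hosts` is a hypothetical helper, not part of any Google tool.

```python
from collections import Counter
from urllib.parse import urlsplit


def linking_hosts(backlink_urls: list[str]) -> Counter:
    """Count backlinks per linking host, treating 'www.example.com' as 'example.com'."""
    hosts: Counter = Counter()
    for url in backlink_urls:
        # hostname is already lowercase; entries without a scheme have none and are skipped
        host = urlsplit(url.strip()).hostname or ""
        if host.startswith("www."):
            host = host[len("www."):]
        if host:
            hosts[host] += 1
    return hosts


if __name__ == "__main__":
    backlinks = [
        "https://www.example-news.com/story/123",
        "https://example-news.com/tags/shoes",
        "http://cheap-links-4u.biz/page1",
        "http://cheap-links-4u.biz/page2",
        "http://cheap-links-4u.biz/page3",
    ]
    for host, count in linking_hosts(backlinks).most_common():
        print(f"{count:>3}  {host}")
```

The domains above are made up. Many links from one host are not automatically bad, but it tells you which sites to look at first.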

Disavow Spam Backlinks

If you do find spammy backlinks, Google recommends contacting the site that hosts them and asking for the links to be removed. If the owner refuses or can’t be reached, you can disavow the links. However, John Mueller, a Webmaster Trends Analyst at Google, says the disavow tool is a last resort, best used if Google has taken manual action against your website or if you have a history of using spammy backlinks.
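If you do reach that last resort, Google’s disavow tool accepts a plain-text file with one entry per line: a full URL to disavow links from a single page, `domain:` followed by a domain name to disavow every link from that site, and lines starting with `#` as comments. The sketch below assembles such a file from lists you have already reviewed by hand; the function and its parameters are hypothetical, and it is worth checking Google’s current disavow documentation before uploading anything.

```python
def build_disavow_file(domains: list[str], urls: list[str], note: str = "") -> str:
    """Return disavow-file text: an optional comment, then domain: lines, then URLs."""
    lines: list[str] = []
    if note:
        lines.append(f"# {note}")  # lines starting with '#' are comments
    for domain in sorted(set(domains)):
        lines.append(f"domain:{domain}")  # disavows every link from this domain
    for url in sorted(set(urls)):
        lines.append(url)  # disavows links from this one page only
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = build_disavow_file(
        domains=["cheap-links-4u.biz"],
        urls=["http://spam.example/page.html"],
        note="Site owners contacted; no response",
    )
    print(text, end="")
```

Save the result as a UTF-8 `.txt` file before uploading it. Deduplicating and sorting keeps the file easy to review, which matters because Google warns that using the tool incorrectly can harm your site’s performance in search.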

Google Penguin can distinguish normal linking behavior from a negative SEO attack, in which a competitor points spammy links at your site to lower its rank in SERPs. This is clearly a black hat tactic, and Google often detects it as such.

Even so, Google can still take manual or algorithmic action against your site. The action may not come from Penguin, since other algorithms can also unearth bad links.

Follow Me

Unleashing the Power of the Office Accelerator: Maximizing Productivity and Efficiency in the Workplace with Office 365 Accelerator

Unlocking the Hidden Potential of Your Website: Strategies for Growth

From AI to VR: How Cutting-Edge Tech Is Reshaping Personal Injury Law in Chicago

Trending

Microsoft4 years ago

Microsoft4 years agoMicrosoft Office 2016 Torrent With Product Keys (Free Download)

Torrent4 years ago

Torrent4 years agoLes 15 Meilleurs Sites De Téléchargement Direct De Films 2020

Money4 years ago

Money4 years ago25 Ways To Make Money Online

Torrent4 years ago

Torrent4 years agoFL Studio 12 Crack Télécharger la version complète fissurée 2020

Education3 years ago

Education3 years agoSignificado Dos Emojis Usado no WhatsApp

Technology4 years ago

Technology4 years agoAvantages d’acheter FL Studio 12

Technology4 years ago

Technology4 years agoDESKRIPSI DAN MANFAAT KURSUS PELATIHAN COREL DRAW

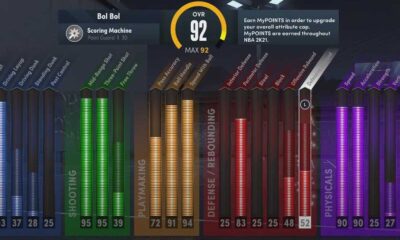

Education3 years ago

Education3 years agoBest Steph Curry NBA 2K21 Build – How To Make Attribute, Badges and Animation On Steph Curry Build 2K21

You must be logged in to post a comment Login